Wrangling of OpenStreetMap Data from Zürich¶

In this project I will have a look at the OpenStreeMap data of the area around Zürich in Switzerland. The goal is to apply data wrangling techniques to asses the data quality and clean possible uniformities and inconsistencies. Finally, the data will be passed to a structured SQL database using the SQLite engine. Basic SQL querries and aggregations as well as the sqlite3 Python API will be used to gather statistics and interesting insights about the data set.

During the project the following Python libraries were used:

import csv

import matplotlib.pyplot as plt

% matplotlib inline

import mplleaflet

import pprint

import re

import sqlite3

import xml.etree.cElementTree as ET

The csv and xml libraries were used for data gathering and parsing while sqlite3 was required for database interactions. mplleaflet is a simple library that allows to convert matplotlib visualisations into zoomable Leaflet maps.

Map Area¶

Zürich, Switzerland

Data Exploration¶

I created a few simple helper functions to explore the xml data. They can be found in the explore.py file. They were used to count the different root elements and their children to get a feeling for the structure and dimensions of the file.

A output returned by the functions can be found in the code cell below.

# osm xml file

file = 'zurich.osm'

root_attributes, child_attributes = get_file_structure(file)

print("Root elements and their attributes:")

pprint.pprint(root_attributes)

print()

print("Children and their attributes within the root elements:")

pprint.pprint(child_attributes)

print()

From these results I could get a clear picture of the tree structure of the OSM XML file.

<node id lat lon user uid version changeset timestamp> [2 018 926]

<tag k v /> [340 957]

</node>

<way id user uid version changeset timestamp> [302 080]

<tag k v /> [936 274]

<nd ref /> [2 530 372]

</way>

<relation id user uid version changeset timestamp> [4 765]

<tag k v /> [21 916]

<member ref role type /> [135 999]

</relation>

We can see that the data is split into three different root element categories: node, way, and relation.

Nodes consist of single points in space defined by their latitude and longitude. Ways contain tags and ordered lists of nodes that define their shape. This nodes are clearly identified by the unique node id in the ref attribute of their nd children. Relations are used to describe logical or geographical relationships between their member childrens than can either be node or way types. All three different root elements can have tag children that contain further information stored as key and value pairs. The numbers inbetween square brackets denote the number of occurences of the element in that line.

My next step in the data exploration process was to have a look at the different keys and values that appear in the tag children of the different root elements. Therefore I used further exploration helper functions to count the different keys in the tag children of the specified root element and to count the different values associated with a certain key.

To show how these functions work, they will be used in the code block below to print out the most frequent keys within all tag elements and the different surface type values that occur in way root elements.

# different keys within all root elements

tag_keys = get_tag_keys(file, ['node', 'way', 'relation'])

# most frequent values associated with "building" key

way_tag_surface_values = get_tag_key_values(file, ['way'], 'surface')

# print out top 20 entries of both lists

print()

pprint.pprint(tag_keys[:20])

print()

pprint.pprint(way_tag_surface_values[:20])

Data Auditing - Problems Encountered in the Map¶

While browsing trough the values of some tag key attributes I did not discover any major data inconsistencies but only a few formatting variations. I noticed four main formatting problems:

Housenumbers in Switzerland are sometimes combinations of numbers and letters. Flats in the same apartement building for example might have the same housenumber but are then distinguished by adding a letter after the number (1A, 1B, 1C,...). I want all of these letters to be uppercase.

If cities or villages with the same name appear in multiple locations, they are usually suffixed with abbreviation of the state they are in. For the canton of Zürich, this abbreviation is ZH. I want all locations that have this suffix to be in the same format, "(ZH)". Furthermore, I want to fix a few abbreviations that occur, such as b., a., a.A..

Not all website values have the same formatting. I will harmonize them so that each entry starts with "http://www.".

The format for phone numbers should be standardized to "+41 xx xxx xx xx".

The audit() function from the audit.py file was used to print out data entries that do not follow the desired specifications. This allowed to see which patterns needed to be cleaned. As an example the audit function for phone numbers is shown below. If the audit_phone_number() function finds a number that does not follow the standard Swiss format it will be added to the bad_phone_number list.

# audit phone

def audit_phone_number(phone_number, bad_phone_numbers):

# check if phone number follows the standard format

phone_number_re = re.compile('\+[0-9]{2} [0-9]{2} [0-9]{3} [0-9]{2} [0-9]{2}') # +41 xx xxx xx xx

m = phone_number_re.search(phone_number)

if not m:

bad_phone_numbers.append(phone_number)

To address all the issues shown above I created cleaning functions that will be applied to the respective tag values before storing them in the csv files that will be imported into the database. To continue the example form above only the cleaning function for phone numbers is shown here. All the others can be found in the clean.py file.

def clean_phone_number(phone_number):

phone_number_re = re.compile('\+[0-9]{2} [0-9]{2} [0-9]{3} [0-9]{2} [0-9]{2}')

m = phone_number_re.search(phone_number)

if not m:

# remove '(0)', '-' and ' '

for ch in ["-", "(0)", " "]:

if ch in phone_number:

phone_number = phone_number.replace(ch, "")

# set correct starting sequence

if phone_number[:3] != "+41":

if phone_number[:2] == "04" or phone_number[:2] == "07":

phone_number = "+41{}".format(phone_number[1:])

# set spacing

phone_number = "{} {} {} {} {}".format(phone_number[:3], phone_number[3:5], phone_number[5:8],

phone_number[8:10], phone_number[10:])

return phone_number

SQL Database¶

To be able to store the OSM data in a SQL database, the xml input file will be transformed into a tabular dictionary format. This format can easily be stored in csv files which will then be imported into the different SQL tables.

The shape_element() function takes an iterparse element object as input and returns a formatted dictionary. If the element top level tag is equal to "node", the function will return the following dictionary structure:

{'node': {'node_id': ...,

'lat': ...,

'lon': ...,

'user': ...,

'uid': ...,

'version': ...,

'changeset': ...,

'timestamp': ...},

'node_tags': [{'node_id': ...,

'key': ...,

'value': ...,

'type': ...},

{'node_id': ...,

'key': ...,

'value': ...,

'type': ...},

...]

}The node field contains all the attributes of the node root element. The node_tags field contains a list with dictionaries for all the secondary tag children of the passed root element. 'node_tags' entries are are connected to their parent elements based on the unique node_id values. Furthermore, if the tag k attribute contains any problematic characters, the tag is ignored, but if the tag contains a ":", the tag type well be set to the characters coming before the ":". If the tag key does not contain a colon, the type field will be set to "regular". Before any v attributes are written to the value field, they are cleaned using the cleaning functions from the section above.

Similar dictionaries were shaped for "way" and "relation" root elements. The shaping function can be found in the shape.py file. The main purpose of the shaping functions is to convert the XML elements into a format that can easily be stored in a csv file. Each csv file will later represent a distinct table in the SQL database. The convert_to_csv() function in the main.py file iterates trough the all root elements, collects the data for each one in a shaped dictionary and writes them line-by-line to the different output files.

Before we can import the csv files and store the data in a databse we need to create the empty SQL databse and define the structure of its tables. Instead of doing this manually trough the SQLite command line interface, I used the Python API.

def create_table(conn, create_table_sql):

"""Create new sql table based on the command given as string."""

c = conn.cursor()

c.execute(create_table_sql)

# SQL commands

create_nodes_table_sql = """CREATE TABLE IF NOT EXISTS nodes (

node_id INTEGER PRIMARY KEY NOT NULL,

lat REAL,

lon REAL,

user TEXT,

uid INTEGER,

version INTEGER,

changeset INTEGER,

timestamp TEXT

);"""

create_nodes_tags_table_sql = """CREATE TABLE IF NOT EXISTS nodes_tags (

node_id INTEGER,

key TEXT,

value TEXT,

type TEXT,

FOREIGN KEY (node_id) REFERENCES nodes(id)

);"""

create_ways_table_sql = """CREATE TABLE IF NOT EXISTS ways (

way_id INTEGER PRIMARY KEY NOT NULL,

user TEXT,

uid INTEGER,

version INTEGER,

changeset INTEGER,

timestamp TEXT

);"""

create_ways_tags_table_sql = """CREATE TABLE IF NOT EXISTS ways_tags (

way_id INTEGER,

key TEXT,

value TEXT,

type TEXT,

FOREIGN KEY (way_id) REFERENCES ways(id)

);"""

create_ways_nodes_table_sql = """CREATE TABLE IF NOT EXISTS ways_nodes (

way_id INTEGER,

node_id INTEGER,

position INTEGER,

FOREIGN KEY (way_id) REFERENCES ways(id),

FOREIGN KEY (node_id) REFERENCES nodes(id)

);"""

create_relations_table_sql = """CREATE TABLE IF NOT EXISTS relations (

relation_id INTEGER PRIMARY KEY NOT NULL,

user TEXT,

uid INTEGER,

version INTEGER,

changeset INTEGER,

timestamp TEXT

);"""

create_relations_tags_table_sql = """CREATE TABLE IF NOT EXISTS relations_tags (

relation_id INTEGER,

key TEXT,

value TEXT,

type TEXT,

FOREIGN KEY (relation_id) REFERENCES relations(id)

);"""

create_relations_members_table_sql = """CREATE TABLE IF NOT EXISTS relations_members (

relation_id INTEGER,

type TEXT,

node_id INTEGER,

way_id INTEGER,

role TEXT,

FOREIGN KEY (relation_id) REFERENCES relations(id),

FOREIGN KEY (node_id) REFERENCES nodes(id),

FOREIGN KEY (way_id) REFERENCES ways(id)

);"""

# list containing all querries to create the tables

sql_tables = [create_nodes_table_sql, create_nodes_tags_table_sql,

create_ways_table_sql, create_ways_tags_table_sql, create_ways_nodes_table_sql,

create_relations_table_sql, create_relations_tags_table_sql, create_relations_members_table_sql]

# database name

db = "zurich.db"

# create database and set up the tables

conn = sqlite3.connect(db) # creates new db if it does not already exist

for t in sql_tables:

create_table(conn, t)

conn.close()

Finally, the csv files can be imported into the SQL database. For this step I also used the SQLite Python API. The fields of each line of the csv file are aggregated in a list of lists. The executemany() method is then used to execute the SQL command against all the given parameter sequences.

def import_csv_file_into_db(db_name, file_name, table_name):

"""

Import csv file into database. The table needs to exist already.

"""

with open(file_name, 'r', encoding='utf-8') as f:

f_reader = csv.DictReader(f)

header = f_reader.fieldnames

# list of lists containing the csv rows for the db import

db_data = [[r[k] for k in header] for r in f_reader]

# construct sql command

columns = "({})".format(', '.join(header)) # concatenate field names

values = "({})".format(', '.join(['?' for i in range(len(header))])) # number of ? equal to number of fields

sql_command = "INSERT INTO {} {} VALUES {};".format(table_name, columns, values)

# connect to db and import the data

conn = sqlite3.connect(db_name)

cursor = conn.cursor()

cursor.executemany(sql_command, db_data)

conn.commit()

conn.close()

print("Import of {} successful!".format(file_name))

# list containing all csv file paths

files = [nodes_path, nodes_tags_path,

ways_path, ways_tags_path, ways_nodes_path,

relations_path, relations_tags_path, relations_members_path]

# get table names for the import function

conn = sqlite3.connect(db)

cursor = conn.cursor()

cursor.execute("SELECT name FROM sqlite_master WHERE type='table';")

tables = [t[0] for t in cursor.fetchall()]

conn.close()

# write each csv file to its correpsonding table

for f, t in zip(files, tables):

import_csv_file_into_db(db, f, t)

Overview of the Data¶

This section contains basic information and statistics about the SQL database.

During data wrangling and the set-up of the SQL database the following files were created and used:

zurich.osm ................. 471.1 MB

nodes.csv .................. 160.1 MB

nodes_tags.csv ............. 12.2 MB

ways.csv ................... 17.4 MB

ways_nodes.csv ............. 59.9 MB

ways_tags.csv .............. 32.2 MB

relations.csv .............. 0.3 MB

relations_tags.csv ......... 0.8 MB

relations_members.csv ...... 3.6 MB

zurich.db .................. 261.3 MB

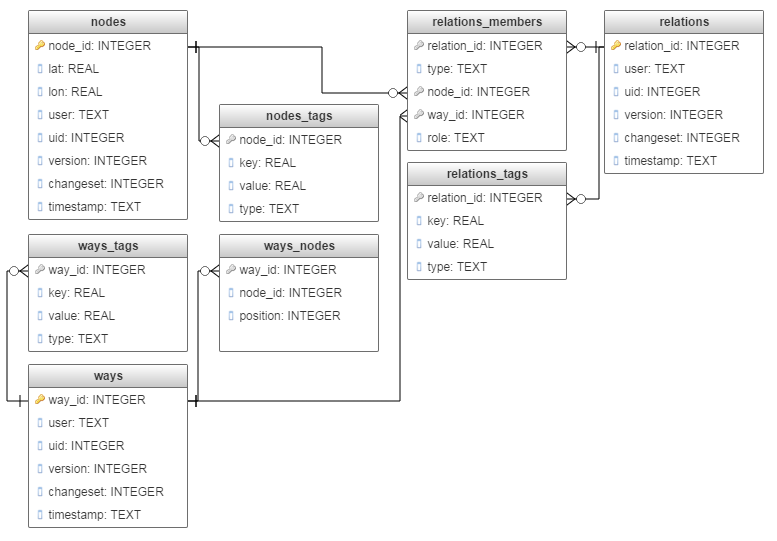

The following figure shows the structure of the "zurich.db" database. It displays all the tables with their respective columns and how they are interconnected.

Explain relations_members table with way_id and node_id!!!

Number of nodes, ways and relations¶

Using SQL commands with the structure displayed below I counted the number of entries in each table containing the three different types of root elements.

SELECT count(*) FROM nodes;

The results of the querries are summarized in the following table:

| Table | Number of Rows |

|---|---|

| nodes | 2'018'926 |

| nodes_tags | 340'957 |

| ways | 302'080 |

| ways_tags | 936'274 |

| ways_nodes | 2'530'372 |

| relations | 4'765 |

| relations_tags | 21'916 |

| relations_members | 135'999 |

These numbers correspond with the number of elements we counted in the XML file during data exploration.

User Statistics¶

Number of unique users that contributed to the dataset:

SELECT count(DISTINCT user)

FROM (SELECT user FROM nodes

UNION ALL SELECT user FROM ways

UNION ALL SELECT user FROM relations);

2255

Top 10 users regarding number of contributions:

SELECT user, num, num / 2325771.0 * 100.0 AS perc

FROM(

SELECT user,

count(*) AS num

FROM (SELECT user FROM nodes

UNION ALL SELECT user FROM ways

UNION ALL SELECT user FROM relations)

GROUP BY user

ORDER BY num DESC)

LIMIT 10;

user | num | perc

-------------|--------|-----------------

mdk | 469461 | 20.1851773024945

SimonPoole | 270031 | 11.6103864051964

Sarob | 145852 | 6.27112471520197

feuerstein | 107720 | 4.63158238708798

joshx | 76684 | 3.29714318391622

ponte1112 | 76593 | 3.2932305029171

ueliw0 | 62249 | 2.67648878586929

captain_slow | 55334 | 2.37916802643081

dulix9 | 47797 | 2.05510344741593

kuede | 39949 | 1.71766695861286

By dividing trough the total number of root elements of 2'325'771 we get the overall contributions of the users as a percentage value. It shows that the top 5 contributiors added about 50 % of all the data entries.

Additional Data Analysis¶

Amenities¶

Most common amenities:

SELECT value, count(*) AS num

FROM (SELECT value FROM nodes_tags WHERE key="amenity"

UNION ALL SELECT value FROM ways_tags WHERE key="amenity"

UNION ALL SELECT value FROM relations_tags WHERE key="amenity")

GROUP BY value

ORDER BY num DESC

LIMIT 10;

value | num

--------------- | ----

parking | 3630

bench | 2803

restaurant | 1767

drinking_water | 1220

school | 827

waste_basket | 817

post_box | 781

vending_machine | 642

bicycle_parking | 554

recycling | 438

Restaurants and their Cuisines¶

To have a closer look at the restaurant nodes I extracted their longitudes and latitudes and displayed them in a Leaflet map.

# sql query

sql_query = """

SELECT lon, lat

FROM nodes, nodes_tags

WHERE nodes_tags.node_id = nodes.node_id

AND nodes_tags.key="amenity"

AND nodes_tags.value="restaurant";

"""

conn = sqlite3.connect('zurich.db')

cursor = conn.cursor()

cursor.execute(sql_query)

results = cursor.fetchall()

conn.close()

# extract lon and lat

lon, lat = [x for x, y in results], [y for x, y in results]

# plot restaurant positions on mplleaflet map

f = plt.figure(figsize=(15, 8))

plt.scatter(lon, lat)

plt.xlabel("Longitude")

plt.ylabel("Latitude")

mplleaflet.display()

Bar plot of the 10 most popular restaurant cuisines in Zürich:

# sql query

sql_query = """

SELECT nodes_tags.value, count(*) AS num

FROM nodes, nodes_tags

WHERE nodes_tags.node_id = nodes.node_id

AND nodes_tags.key="cuisine"

GROUP BY nodes_tags.value

ORDER BY num DESC

LIMIT 10;

"""

conn = sqlite3.connect('zurich.db')

cursor = conn.cursor()

cursor.execute(sql_query)

results = cursor.fetchall()

conn.close()

# index, labels and counts for the bar plot

ind = range(len(results))

l, c = [l for l, c in results], [c for l, c in results]

fig, ax = plt.subplots(figsize=(8, 6))

ax.bar(ind, c)

ax.set(title="Most popular Cuisines in Zürich", ylabel="Number of Restaurants", xticks=ind)

ax.set_xticklabels(l, rotation=45)

ax.grid(alpha=0.4, axis='y')

plt.show()

Sports¶

Most popular sports found in Zürich:

# sql query

sql_query = """

SELECT value, count(*) AS num

FROM (SELECT value FROM nodes_tags WHERE key="sport"

UNION ALL SELECT value FROM ways_tags WHERE key="sport"

UNION ALL SELECT value FROM relations_tags WHERE key="sport")

GROUP BY value

ORDER BY num DESC

LIMIT 20;

"""

conn = sqlite3.connect('zurich.db')

cursor = conn.cursor()

cursor.execute(sql_query)

results = cursor.fetchall()

conn.close()

# index, labels and counts for the horizontal bar plot

ind = range(len(results))

l, c = [l for l, c in results[::-1]], [c for l, c in results[::-1]]

fig, ax = plt.subplots(figsize=(8, 6))

ax.barh(ind, c)

ax.set(title="Most popular Sports in Zürich", xlabel="Number of Tags", yticks=ind)

ax.set_yticklabels(l, rotation=0)

ax.grid(alpha=0.4, axis='x')

plt.show()

Additional Ideas for improving the Data Set¶

The first idea that came to my mind when thinking about OpenStreetMap data is why not use the same information from Google Maps. As Google is a huge corporation with limitless resources their data must be more accurate than that of an open source community. But as Google is return driven, I think they will mainly focus on urban areas and big cities where exact and up-to-data mapping is beneficial for their marketing purposes while the OSM community also focuses on more rural areas for more creative purposes such as hiking, cycling or other types of sports (see most popular sports found in Zurich above). With a simple querry we can also count the number of hiking trails in the nodes_tags table which account for about 500 entries.

SELECT count(*) FROM nodes_tags WHERE key='hiking';

498

Therefore I would suggest that one could use the Google Maps API to improve data quality in cities. Map information about shops, restaurants and traffic is probably more accurate there. A big problem here might be that the Google Maps data is owned by Google and we run into copyright issues. But on the other hand, to improve the data in the countryside one could try to combine the map updates with certain sports activities such as Geocaching. The use of mobile GPS during this type of treasure hunt would guarantee accurate location data in remote areas.

Conclusion¶

The review of the OpenStreetMap data of the area around Zürich showed me that the data quality is already pretty high. I did not encounter any major problems regarding data validity and accuracy but just a few minor inconsistencies in formatting. While handling the large XML file is very inefficient and slow, once the data in transferred to the SQL database, the data can be querried in a really fast way trough the command line interface or the Python API.

During data analysis I looked at a few interesting features such as the different users that contributed to the data set and the different restaurants and sport centers available in Zürich. I also touched on the mplleaflet library for the display of geographical nodes on a interactive JavaScript map. Additionally, it could also be used for the visualisation of polygenic ways and relations.